If you work online, you need web analytics tools to understand user behavior and make smarter decisions. Yet choosing the web analytics software your organization should use can be daunting. Countless analytics programs exist: Google Analytics, Adobe, Mixpanel, Crazy Egg, Piwik, Alexa, Quantcast, KISSmetrics, Clicktale, Tealeaf, Similarweb, Amplitude, Fullstory (we hope), and any number of other tools, plus a range of open source web analytics tools.

How do you decide which tool(s) to pick—and is there really such a thing as a "free" analytics tool? What is the real cost of web analytics tools?

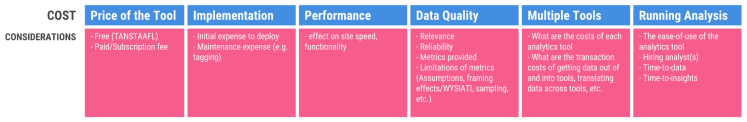

It's more complicated than it seems, so we're sharing a framework for analyzing the fully-baked cost of web analytics tools. It covers everything from the sticker price to implementation costs, impact on performance, data quality (relevance, reliability, and cognitive bias), the cost of juggling multiple tools, and even the costs your tools create when it comes to running analyses.

There's much to consider beneath the surface of any given analytics tool, so buckle up—that fancy, "free" analytics tool may cost more than you think.

Spoiler alert: there's no such thing as a free analytics tool.

Analyzing the Cost of Web Analytics Tools—a Framework.

1. The cost of the tool (or tools), itself.

The “sticker price” of a particular tool is the most easily quantifiable cost of data analysis — and it’s also where discussion around analytics tools can get stuck.

Many analytics tools are free, meaning there is no monthly fee to use them. Google Analytics is free. Fullstory offers a free version, too, as do many other analytics platforms.

Beyond free tools, there are, of course, paid, subscription-based web analytics options. These paid services can vary dramatically in price depending on factors such as data volume, feature tier, and the number of users.

When estimating the cost of web analytics, “free” or “paid” should only be the beginning. Don’t get stuck on the sticker price of a tool — particularly if that price is “free.”

Have you ever heard the expression “There ain’t no such thing as a free lunch”? “TANSTAAFL” is a popular phrase in economics that reminds us that everything has a cost.

Free web analytics tools don't exist. TANSTAAFL. Why? Because it's all the other costs that make web analytics tools expensive: the cost to implement the tool, its effect on your site or app's performance, the cost of poor data quality, integration costs, and the cost of extracting insights. These are the real underlying factors behind the price of analytics.

So start at the sticker price, but keep going.

2. The cost to implement the tool.

No magic wand exists that will install a given analytics tool, and implementing tracking code across your website or app can get downright complicated.

Depending on how your site or app is built, implementation can require any number of code injections: putting code into proprietary, one-off pages, including it in template headers, adding it to widgets in content management systems, and so on.

Managing implementation never really stops: as your site or app grows and changes, the tracking code has to be maintained along with it. The more complexity baked into your analytics tool, the more expensive maintenance can be.

Estimating the cost of implementation requires identifying which team or individual will own the process and factoring in the cost of their time. That cost can be measured both in salary and in the work they aren’t doing: time spent maintaining analytics code is time not spent fixing bugs, improving the product, and so on.

3. The cost of the tool's impact on performance.

Every bit of code on your site or app has the potential to affect performance. Performance, meaning site speed, can have a subtle yet profound effect on the online experience for your site users.

Much has been written on this subject, and it’s common knowledge that performance matters, but consider one fact: Google has found that a mere 400 ms delay in search results can result in a 0.44 percent drop in search volume. Given that Google sees some 2 trillion searches per year, a 0.44 percent drop would mean roughly 9 billion fewer searches.

Site performance matters to your bottom line, so the impact of a given analytics tool (or a number of tools) on the speed and performance of your site or app can be measured in terms of bouncing users, missed sales, and lower KPIs.
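To put rough numbers on that for your own site, a back-of-the-envelope sketch can help. It assumes a slowdown removes a fixed fraction of activity; the `lost_volume` helper and every site figure (sessions, conversion rate, order value, and the 0.5% drop) are hypothetical placeholders. Only the Google inputs come from the text above.

```python
def lost_volume(baseline: float, drop_fraction: float) -> float:
    """Activity lost when a slowdown cuts volume by drop_fraction (0.0044 means 0.44%)."""
    return baseline * drop_fraction


# Reproduce the Google figure: 0.44% of roughly 2 trillion searches per year.
print(f"{lost_volume(2e12, 0.0044):,.0f}")  # 8,800,000,000

# Hypothetical site: 1,000,000 sessions/year, 2% conversion, $50 average order,
# and an ASSUMED 0.5% drop in sessions caused by a slow analytics tag.
lost_sessions = lost_volume(1_000_000, 0.005)
lost_revenue = lost_sessions * 0.02 * 50
print(f"{lost_sessions:,.0f} sessions, ${lost_revenue:,.0f} in missed sales")
```

The hard part in practice is estimating the drop fraction for your own tag; the multiplication itself is trivial.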

4. The cost of low quality data.

This is a big one.

What you pay in terms of data quality is hard to measure. Data quality pivots on many factors, from basics like the relevance and reliability of the data to more complex ones, including our own cognitive hard-wiring. Let’s take a closer look.

Relevance of the data.

Is the data relevant to the problems you’re looking to solve? Is it reliable? Relevance depends on whether the data you receive helps answer the question at hand. It also depends on timeliness: by the time you get the data, is it still useful for answering your question? Analytics tools that don't provide real-time data are less relevant from the start.

There's another aspect to "real-time stats": the time it takes to get the data out of the tool. If the data you need isn't immediately accessible or extractable, it takes longer to get at the information. And if it takes too long, will it still make a difference to your decisions?

Google is a relevance machine for exactly this reason: you ask the search engine a question and instantly receive highly relevant results. Decisions are easier to make when analytics arrive in real time.

Reliability of the data.

The metrics provided by a given analytics tool have a strong-yet-subtle effect on the quality of data because metrics have built-in assumptions that frame your understanding. Let’s unpack this.

» What you see is all there is.

In Daniel Kahneman’s book Thinking, Fast and Slow, Kahneman shares research supporting the idea that people make decisions using only the information readily available to them, without considering what’s not explicitly known. “What you see is all there is,” or “WYSIATI,” is a way to remember this source of overconfidence. Applied to web analytics, the tendency is to focus on what you know. In that way, the data your analytics tools present becomes authoritative, the singular basis for decisions, and can lead to “jumping to conclusions” without looking for gaps in your information.

» Framing effects.

Framing effects describe how the way information is presented affects how it is interpreted. Consider the following example of a framing effect, and ask yourself which phrasing sounds more positive and which sounds more negative: “90% of users ignored the new feature on your website in the first week after launch” versus “10% of users engaged with the new feature in the first week.”

Framing effects are a major focus of behavioral psychology because how information is framed matters greatly to decisions. On the web, the effect of framing is far-reaching. Consider the default opt-in/opt-out switch on a sign-up form: if it is toggled “on” by default instead of “off,” you are all but guaranteed to have more people opt in.

» Assumptions.

Anyone who spends time with data understands that the pretty graph or clean metric comes with a list of assumptions and caveats. As the saying goes, “The devil is in the details.” Meanwhile, because analysis is conducted to “figure something out,” the person crunching the numbers can bias the results based on their perspective. Nobel Prize-winning economist Ronald Coase may have summed up this phenomenon best:

“If you torture the data long enough, it will confess.”

— Ronald Coase

Indeed, if you’ve worked around analysts (or are one!) for any length of time, you will inevitably hear the question, “Well, what do you want the numbers to be?”

Meanwhile, settling on a specific metric asserts its validity as a basis for decision-making and ties you to that metric’s built-in assumptions. Depending on the metric, this can be a problem. Metrics that aggregate data come with their own set of assumptions. For example, using averages without considering the nature of the underlying data can lead to bad decisions.
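A small, made-up illustration of the averages problem: six time-on-page values where one visitor left a tab open. The numbers are hypothetical; the point is only that the mean and the median can tell very different stories about the same data.

```python
from statistics import mean, median

# Hypothetical time-on-page values (seconds) for six visits.
# One visitor left a tab open for ten minutes.
time_on_page = [5, 6, 7, 8, 9, 600]

print(f"mean:   {mean(time_on_page):.1f} s")    # mean:   105.8 s
print(f"median: {median(time_on_page):.1f} s")  # median: 7.5 s
```

A dashboard that reports only the mean would suggest visitors spend nearly two minutes on the page, while five of the six left within ten seconds.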

For web analytics tools, bounce rate is a great example of how an assumed metric frames and limits the way you make decisions. Bounce rate captures the percentage of visits in which a user lands on a page and then leaves the site without viewing another page.

Bounce rate, which is simple in theory, is complicated in application. For one, the “framing effect” built into bounce rate is that a high bounce rate is “bad.” However, given the number of single-page apps, dynamic pages with infinite scroll, and other common nuances within any given website or application, bounce rate can be downright irrelevant as a standalone metric. By focusing on bounce rate, you have accepted the assumption that it is a useful metric on which to base decisions (WYSIATI). But in reality, “it depends,” as Google Analytics’ own support documentation on bounce rate explicitly discusses.
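As a hedged sketch of why bounce rate can mislead on single-page apps, the snippet below models sessions with a hypothetical `Session` record and applies the classic definition, in which a session with exactly one page view counts as a bounce. It assumes the app's tracking fires no additional page views on in-app route changes; that is an assumption about configuration, not a property of any particular tool.

```python
from dataclasses import dataclass


@dataclass
class Session:
    # Hypothetical session record; real tools store far more detail.
    page_views: int    # page-view hits the tracking code recorded
    interactions: int  # clicks, form inputs, etc. within the page


def bounce_rate(sessions: list[Session]) -> float:
    """Classic definition: share of sessions with exactly one page view."""
    if not sessions:
        return 0.0
    bounces = sum(1 for s in sessions if s.page_views == 1)
    return bounces / len(sessions)


# ASSUMPTION: a single-page app that never fires extra page views on route
# changes, so every session records one page view however engaged the user was.
spa_sessions = [
    Session(page_views=1, interactions=42),
    Session(page_views=1, interactions=17),
    Session(page_views=1, interactions=0),
    Session(page_views=1, interactions=8),
]
print(f"bounce rate: {bounce_rate(spa_sessions):.0%}")  # bounce rate: 100%
```

Three of those four "bounced" users interacted heavily with the app, yet the metric frames all of them as failures.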

» Sampling.

If your web analytics tool uses sampling, your data quality will unavoidably take a hit. How confident can you be that the sampled data reflects the underlying reality?

When it comes to online behavior, edge cases are the rule. Differences in devices (laptops, tablets, mobile devices), browsers, operating systems, and other factors all combine to make results highly specific to individual users. If a given web analytics tool uses sampling, you have to be comfortable with the reliability of its sampling assumptions.
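To see why small segments are where sampling hurts most, here is a rough sketch using the textbook normal (Wald) approximation for the margin of error of a proportion. The 4% conversion rate and both sample sizes are hypothetical, and the approximation itself becomes unreliable for small samples and rare events, which is part of the point.

```python
import math


def margin_of_error(p: float, n: int, z: float = 1.96) -> float:
    """Approximate 95% margin of error for a proportion (normal/Wald approximation)."""
    return z * math.sqrt(p * (1 - p) / n)


# Hypothetical numbers: a 4% conversion rate observed in a sample.
p = 0.04
site_wide = margin_of_error(p, n=10_000)  # all sampled sessions
segment = margin_of_error(p, n=50)        # e.g. one browser on tablets

print(f"site-wide: 4% ± {site_wide:.2%}")  # site-wide: 4% ± 0.38%
print(f"segment:   4% ± {segment:.2%}")    # segment:   4% ± 5.43%
```

With only 50 sampled sessions, the margin of error is larger than the 4% estimate itself, so the segment figure says very little about the underlying reality.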

Data quality matters. The cost of low-quality data is poor decisions that cost you time and money. “What you see is all there is” means that your analytics tool stack has a pile of answers ready for you, regardless of whether those answers are any good. If they are low quality (or unclear about what you should do), you have no choice but to do additional research in that tool or turn to other analytics tools.

5. The cost of multiple tools.

The perfect, omniscient web analytics tool that has all the answers doesn’t exist (yet!). That means teams must stack together disparate tools in order to fill gaps in their knowledge.

Each tool is subject to the costs detailed above (maintenance, data quality, effects on performance, and so on). In combination, you also pay a bonus cost in friction: switching between tools, managing multiple subscriptions, learning how to use each one (including which tool is useful for what), integrating them where possible, translating metrics between them, and on and on.

With multiple tools, costs compound and the cross-tool transaction costs add up quickly.
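One simple way to see why cross-tool costs add up quickly: if you assume every pair of tools in your stack may need its metrics reconciled at some point, the number of pairs grows as n(n − 1)/2. The sketch below counts those pairs for a hypothetical stack built from tools named earlier; `reconciliation_pairs` is an illustrative helper, and this shows growth, not a cost model.

```python
from itertools import combinations


def reconciliation_pairs(tools: list[str]) -> list[tuple[str, str]]:
    """Every pair of tools whose metrics might need reconciling (an assumption)."""
    return list(combinations(tools, 2))


# Hypothetical stack, added one tool at a time.
stack = ["Google Analytics", "Mixpanel", "Crazy Egg", "Amplitude"]
for n in range(1, len(stack) + 1):
    print(n, "tools ->", len(reconciliation_pairs(stack[:n])), "pairs")
```

Going from two tools to four takes you from one pair to six, before counting subscriptions, training, or performance overhead.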

6. The cost of running analysis.

Last, but certainly not least, is the cost of actually running an analysis with the tool. This cost breaks down into two factors: how hard the tool is to use, and whether decision-makers can use it directly or you need to hire analysts to do the number crunching. Both factors cost you most in terms of time.

The ease-of-use of the analytics tool.

Can a decision-maker pick up the tool and immediately extract data — get at an insight? How much training is required to make the tool useful? The more complex the tool, the more friction it puts on getting to data.

Hiring analysts.

Ease-of-use directly affects whether decision-makers will need to rely on analysts to crunch the numbers. If getting value out of a tool requires analysts, you have the obvious cost of extra headcount, but also the less obvious cost of time: the time it takes to request that an analyst or team run the report you need to make a decision.

Time.

“Time is money,” so the saying goes. Time has come up before in our analysis because it is a primary driver of data quality: timeliness is required for data to be relevant. How do you measure the value of time? Answering that would require its own analysis; however, a simple heuristic works: the value of information is inversely proportional to the time it takes to get that information.

You can apply this heuristic to web analytics tools in two ways:

Time-to-data. Measured as how long it takes to get at the raw data, “time-to-data” is affected by the complexity of the tool — setup requirements, ease-of-use, how easy it is to get data out of the tool, etc. If you’ve ever hunted for some obscure information only to find a very useful graph that can’t be exported into a table, you know the pain of time-to-data.

Time-to-insights. Unlike time-to-data, time-to-insights is how long it takes to go from raw data to meaningful, which is to say actionable, information. An analytics tool may have incredible data that is easy to extract (low time-to-data) yet be painfully expensive when it comes to turning that data into actionable insights.

Let’s take an example. It takes you 20 minutes on average to extract data for a report; that’s your time-to-data. Then, data in hand, it takes you two hours to process that data and tease out an insight; that’s your time-to-insights. Now repeat that process a few hundred times and, if you’re an analyst, factor in the friction of passing on the report only to find that it needs to be re-run for a different time period or some other variable. Time-to-data and time-to-insights add up in a hurry.
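The arithmetic in that example can be sketched as a small function. Here, 300 reports stands in for "a few hundred," and the 25% re-run rate is a hypothetical figure; `analysis_hours` is an illustrative helper, not a feature of any analytics tool.

```python
def analysis_hours(time_to_data_min: float, time_to_insight_min: float,
                   reports: int, rerun_rate: float = 0.0) -> float:
    """Total hours spent producing reports, counting each re-run as a full repeat."""
    per_report = time_to_data_min + time_to_insight_min
    runs = reports * (1 + rerun_rate)
    return per_report * runs / 60


# 20 minutes to data and two hours to insight, as in the example above.
print(analysis_hours(20, 120, reports=300))                   # 700.0
# ASSUMPTION: one in four reports has to be re-run for a different variable.
print(analysis_hours(20, 120, reports=300, rerun_rate=0.25))  # 875.0
```

Cutting time-to-insights in half in this scenario would save several hundred hours, far more than trimming the 20-minute extraction step.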

Adding up all the costs of your analytics.

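Here’s a quick recap of the six buckets to consider when estimating the cost of your web analytics:

1. The cost of the tool (or tools), itself.
2. The cost to implement the tool.
3. The tool's impact on performance.
4. The cost of low-quality data.
5. The cost of multiple tools.
6. The cost of running analysis.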

Why go into such detail to estimate the cost of an analytics tool?

Because analytics tools are expensive, even when they are “free,” and it’s not at all obvious just how expensive they are. By analyzing the cost of a given web analytics tool (or stack of tools), you can articulate not only how expensive it is, but also why.

(And that's a very analytical thing to do.)
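To make that articulation concrete, one option is to tally each bucket of the framework explicitly. The sketch below is a minimal, hypothetical model: an `AnalyticsCost` record with one field per bucket, where every dollar figure is invented for illustration. Swap in your own estimates.

```python
from dataclasses import dataclass, fields


@dataclass
class AnalyticsCost:
    """Hypothetical annual cost of one analytics tool, one field per bucket (dollars)."""
    sticker_price: float = 0.0
    implementation: float = 0.0
    performance: float = 0.0
    data_quality: float = 0.0
    multiple_tools: float = 0.0
    running_analysis: float = 0.0

    def total(self) -> float:
        # Sum every bucket so none can be silently left out.
        return sum(getattr(self, f.name) for f in fields(self))


# Every figure below is a made-up placeholder.
free_tool = AnalyticsCost(
    sticker_price=0,
    implementation=40 * 150,    # e.g. 40 engineering hours at $150/hour
    performance=5_000,          # e.g. estimated missed sales from a slower site
    data_quality=10_000,        # e.g. cost of one decision made on bad data
    multiple_tools=3_000,       # e.g. time spent reconciling metrics across tools
    running_analysis=700 * 75,  # e.g. 700 analyst hours at $75/hour
)
print(free_tool.total())
```

Even with a sticker price of zero, the "free" tool in this made-up scenario carries a substantial total cost, and the breakdown shows which bucket drives it.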

If you’re about to add yet another free tool to your analytics stack (because hey, it’s free, why not?), remember TANSTAAFL. Will the insights you get out of your free tool actually be worth the effort of installing, maintaining, and paying attention to it? Will the "free" data you get out of the tool be high quality, reliable, and relevant?

The kitten may be free, but caring for that cute furball is another thing entirely.

Let's take one more example, this time focusing on a popular analytics "feature": heatmaps. Heatmaps are a fun way to visualize aggregated clicks and mouse movement on a website; in some cases they even attempt to track attention. The thing is, heatmaps have lots of problems that can make them very expensive. Consider their built-in assumptions and the impact those assumptions have on data quality (e.g., how data is aggregated across devices and browsers, and how heatmaps deal with dynamic applications).

Additionally, heatmaps can also be costly to configure and implement.

With all that work done (and the assumptions accepted), once you get your heatmap visualizations, you're suddenly stuck with the problem of WYSIATI. In this case, your web heatmaps present you with lots of colorful splotches that imply "insights." But are they actually insights?

The cost of a given analytics tool can add up quickly, and as the heatmap example shows, the costs aren't always obvious (assumptions, data quality, unclear insights, etc.).

How expensive is your web analytics tool?

Henry Ford famously quipped that, "If you need a machine and don’t buy it, then you will ultimately find that you have paid for it and don’t have it."

When it comes to web analytics tools, costs matter, but no cost is greater than that of choosing the wrong tool. The easiest way to choose the wrong tool? Only pay attention to the sticker price.

And whether it’s through developers spending months implementing and maintaining the tools, stakeholders struggling through aggregated reports that lack clarity or obvious actions, poor decisions made from assumption-loaded data, framing effects that limit how you even understand the information, or any number of other problems examined in this analysis, perhaps the juice isn’t worth the squeeze.

Analytics tools only work when they provide reliable, relevant insights that you can act on. The best analytics tools will be clear and actionable, having low time-to-data and time-to-insights.

Keep asking the hard questions about your tools. Run the analysis and discover the real cost of your web analytics stack.

Find out how Fullstory can help you level up your digital experience strategy.

Request a demo or try a limited version of Fullstory for free.